Large Language Models (LLMs) have become important tools for me across a variety of tasks. From natural language understanding to generating creative content, their abilities enable them to contribute to my problem-solving and decision-making processes. However, despite their remarkable capabilities, there are instances where LLMs fall short of providing optimal results, and users may find that the generated content does not fully meet their expectations.

To get better results, we often explore various methods to fine-tune or guide the LLM's output. For example, adjusting parameters or incorporating additional context are common approaches. Whatever the strategy, one crucial consideration remains: its cost-effectiveness.

Among the strategies to optimize LLM outputs, prompting stands out as a cheaper option than retraining a model. While it often yields better results, it demands more trial-and-error effort than other methods.

Introduction

Let there be a teacher, P, and a student, Q. In class, when P wants to check how well Q understands a topic, he asks Q a question related to it. Suppose Q cannot answer it correctly. P then tries a different approach without losing any context of the original question, and this time Q answers easily and correctly. The illustration above shows how prompting works.

Prompt Engineering involves carefully crafting input instructions to guide the model toward generating desired responses. We use prompt engineering to improve the capacity of LLMs on a wide range of common and complex tasks such as question answering and arithmetic reasoning. Developers use prompt engineering to design robust and effective prompting techniques that interface with LLMs and other tools [see].

Zero, One, and Few-Shot

A zero-shot prompt is the most basic way to prompt an LLM: we assume the model has no prior knowledge of the task and we give it zero examples. In short, it amounts to just asking the question.

Where should I cook a soup?

The one-shot prompt, as the name suggests, is a prompt with one example. The example shows the model how to answer: what format to use and what kind of answer is expected.

We cook the scrambled egg in a pan. In that context, where should I cook soup?

The few-shot prompt uses the same technique with more examples. The more examples we give, the more likely the model is to produce an answer in the style of those examples [see].

We cook the scrambled egg in a pan. Meanwhile, we heat up the rice in a rice cooker. On the other hand, we pop the popcorn in a microwave. How about a soup?
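The three prompt styles differ only in how many examples come before the question. A minimal sketch of that idea follows, assuming prompts are plain strings; the helper name `build_prompt` is hypothetical and not part of any library.

```python
from typing import Sequence


def build_prompt(question: str, examples: Sequence[str] = ()) -> str:
    """Prefix the question with zero, one, or several example statements."""
    return " ".join([*examples, question])


# Zero-shot: no examples, just the question.
zero_shot = build_prompt("Where should I cook a soup?")

# One-shot: a single example shows the expected kind of answer.
one_shot = build_prompt(
    "In that context, where should I cook soup?",
    ["We cook the scrambled egg in a pan."],
)

# Few-shot: several examples establish the pattern more firmly.
few_shot = build_prompt(
    "How about a soup?",
    [
        "We cook the scrambled egg in a pan.",
        "Meanwhile, we heat up the rice in a rice cooker.",
        "On the other hand, we pop the popcorn in a microwave.",
    ],
)

for prompt in (zero_shot, one_shot, few_shot):
    print(prompt)
```

The resulting strings can be sent to whichever model interface is in use; the sketch deliberately stops before any model call.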

Negative Prompting

This technique rules out certain contexts within the question or task we give to the LLM.

For example, when we ask ChatGPT about planes: how does a plane fly? Here is the response:

An airplane flies through a combination of aerodynamic principles, propulsion, and control systems. Here's a simplified explanation:

Aerodynamics: The shape of the wings (airfoil) is crucial for generating lift. As the airplane moves forward, air flows over the wings, creating a pressure difference. The shape of the wings, with a curved upper surface and a flatter lower surface, causes the air pressure above the wing to be lower than below, generating lift.

Engines/Propulsion: Most modern airplanes are powered by jet engines or propellers. Jet engines work on the principle of sucking in air, compressing it, mixing it with fuel, igniting the mixture, and expelling it at high speed through a nozzle. This creates thrust, propelling the plane forward.

Thrust and Drag: Thrust is the forward force generated by the engines, while drag is the resistance opposing the plane's forward motion. Balancing these forces is essential for maintaining a steady speed.

Gravity: Gravity pulls the airplane downward. Lift generated by the wings counteracts gravity, allowing the plane to stay airborne.

Control Surfaces: To control the airplane's orientation and direction, there are control surfaces such as ailerons, elevators, and rudders. Ailerons control roll (tilting left or right), elevators control pitch (nose up or down), and the rudder controls yaw (turning left or right).

In summary, an airplane achieves flight by combining lift from its wings, forward thrust from its engines, balance of forces, and control surfaces to navigate through the air.

Meanwhile, when we specifically rule out certain contexts as follows: how does a plane fly without difficult terminology from aerodynamics? Here is the response:

A plane stays in the air and moves forward because of its engines and wings. The engines provide the power needed to push the plane forward, and the wings are designed to lift the plane up. As the plane moves, the wings use the air to create an upward force (lift) that balances out the pull of gravity. This balance between forward movement from the engines and the upward force from the wings allows the plane to fly.

Iterative Prompt

This is a process in which we refine the prompt based on the model's outputs, gradually guiding the model to the desired answer or style of answer. Building on the previous exchange lets the model return a more specific or detailed response and steers the conversation in a more targeted direction.

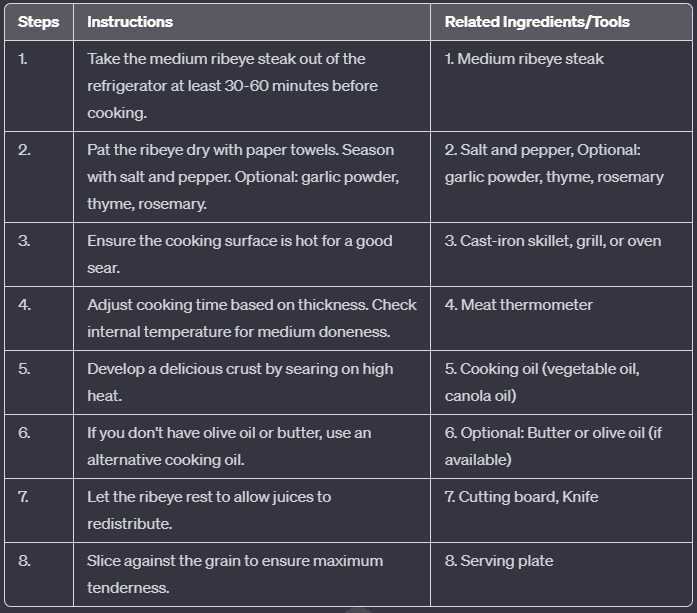

For example, we ask an LLM how to make a steak. The example below does not include the full answers; it only shows how to build up the prompts.

Q: How to make a steak?

A: Making a good steak involves a combination of selecting the right cut, seasoning, and cooking method. Here's a basic guide to help you make a delicious steak — …

Q: From the instructions above, I want to make a medium ribeye. Please revise.

A: Certainly! Here's a revised set of instructions specifically tailored for making a medium ribeye steak — …

Q: I don't have any olive oil or butter. Can we replace them with something else and revise the instructions above?

A: Certainly! If you don't have olive oil or butter, you can use alternative cooking oils such as vegetable oil or canola oil. Here's the revised set of instructions for making a medium ribeye without olive oil or butter — …

Q: Please make a table of the instructions and ingredients

Q: Can we switch the columns above?

Q: Make 3 columns: Steps, Instructions, and then related Ingredients or Tools

Q: Make the Steps column contain just the numbers

Q: Delete the numbers in the Ingredients/Tools column
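The exchange above works because every new prompt is sent together with the earlier turns. The sketch below shows that bookkeeping under some assumptions: the conversation is stored as a list of role/content dictionaries (a common convention, not something the steak example specifies), and `ask_model` is a hypothetical callable standing in for a real LLM client. `echo_model` is a stand-in so the code runs without any service.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def refine(ask_model: Callable[[List[Message]], str], prompts: List[str]) -> List[Message]:
    """Send prompts one at a time, carrying the whole conversation forward."""
    history: List[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        # The model sees every earlier turn, so "revise the instructions above"
        # has something to refer to.
        reply = ask_model(history)
        history.append({"role": "assistant", "content": reply})
    return history


def echo_model(history: List[Message]) -> str:
    """Stand-in for a real model: reports how many user turns it has seen."""
    turns = sum(1 for message in history if message["role"] == "user")
    return f"reply to turn {turns}"


conversation = refine(
    echo_model,
    [
        "How to make a steak?",
        "From the instructions above, I want to make a medium ribeye. Please revise.",
        "Please make a table of the instructions and ingredients",
    ],
)
print(conversation[-1]["content"])
```

Swapping `echo_model` for a function that calls an actual model is the only change needed to try the same loop for real.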

Chain-of-Thought

This prompt asks the model to detail its thought process step by step and give more hints about it. This is particularly useful for complex reasoning tasks [see]. Intuitively, we as humans break a larger task or problem into sub-tasks and then chain them together, using the output of one sub-task as the input for the next.

Let’s try it with ChatGPT:

Q: This year I am 35 years old, meanwhile, my brother is 5/7 of my age. At what age my brother was half of my age?

A: Let's denote your age as A and your brother's age as B.

You mentioned that you are 35 years old, so A = 35.

Your brother's age is 5/7 of your age, so B = (5/7) * A.

Now, let's find the age at which your brother was half of your age:

At that time, your brother's age (B) would be half of your age (A), so B = 0.5 * A.

Now, set up the equation and solve for A:

(5/7) * A = 0.5 * A

Multiply both sides by 7 to get rid of the fraction:

5 * A = 3.5 * A

Subtract 3.5 * A from both sides:

1.5 * A = 0

Divide both sides by 1.5 to solve for A:

A = 0

So, according to the given information, it seems there might be an issue, as the result suggests you are currently 0 years old. Please double-check the information provided.

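The answer is clearly incorrect, but the response already contains a chain of thought. The reasoning goes wrong because it treats the current ratio of 5/7 and the past ratio of 1/2 as if they held at the same time. Let’s ask the same question with more detailed information and ask the model to show how it solves it.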

Q: This year I am 35 years old, meanwhile, my brother is 5/7 of my age. At what age my brother was half of my age? please detail the process with points one by one. Remember right now my age is 35 and the proportion of age always changing over the year

A:

The answer is correct but the CoT process is still not quite right.

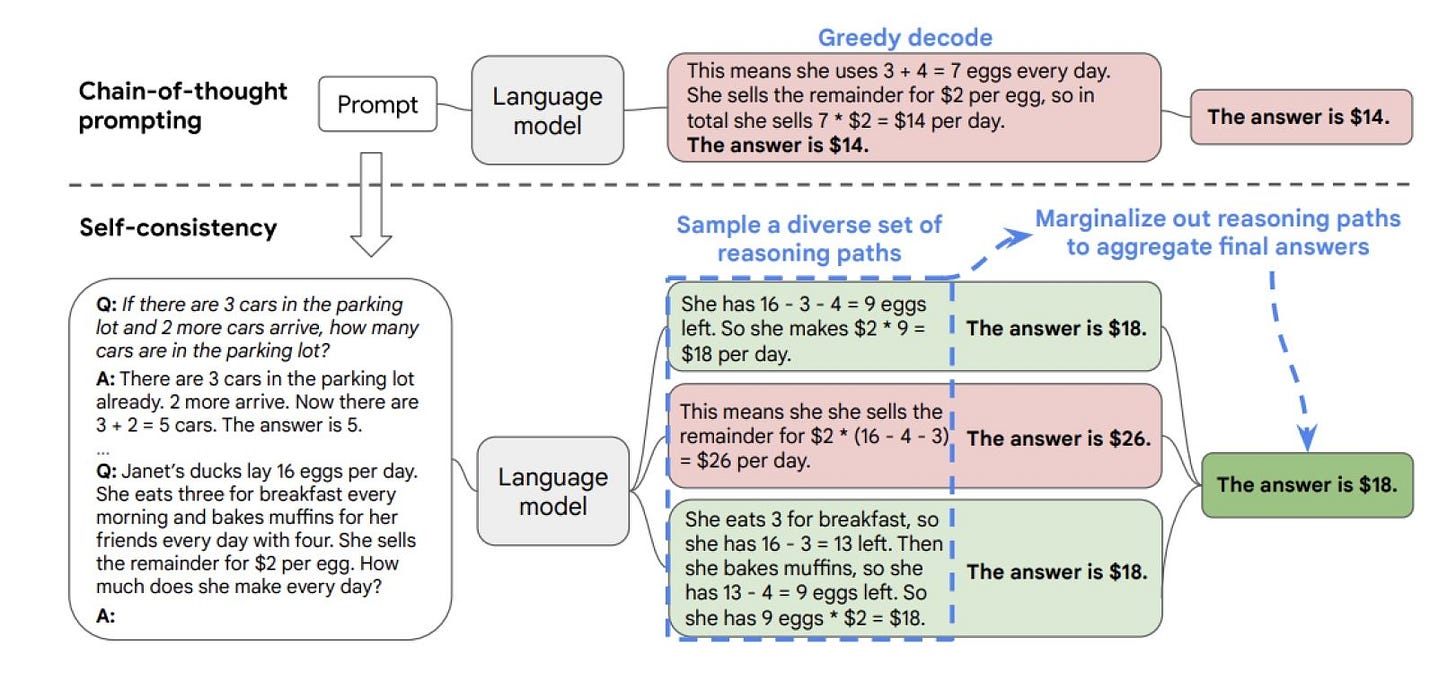
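For reference, the puzzle can be checked by hand with a short chain of sub-steps: find the brother's current age, find the age gap, and use the fact that the gap never changes. The sketch below is an independent check, not the model's output; the function name `age_when_half` is hypothetical.

```python
from fractions import Fraction
from typing import Tuple


def age_when_half(my_age: int, ratio: Fraction) -> Tuple[Fraction, Fraction]:
    """Return (brother's age, my age) at the moment he was half my age."""
    brother_age = ratio * my_age  # step 1: brother's current age
    gap = my_age - brother_age    # step 2: the age gap stays constant
    # Step 3: we need brother_then = (brother_then + gap) / 2,
    # which simplifies to brother_then = gap.
    brother_then = gap
    return brother_then, brother_then + gap


brother_then, me_then = age_when_half(35, Fraction(5, 7))
print(brother_then, me_then)  # prints: 10 20
```

With the numbers from the prompt, the brother is 25 now, the gap is 10 years, and he was half my age when he was 10 and I was 20.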

Self-Consistency

As the name suggests, this technique compares the different responses produced by CoT and chooses the most consistent answer. Typically, a complex reasoning problem admits multiple different ways of thinking that lead to its unique correct answer. Self-consistency first samples a diverse set of reasoning paths instead of only taking the greedy one, and then selects the most consistent answer by marginalizing out the sampled reasoning paths [see].
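A minimal sketch of this idea is shown below, using a simple majority vote over final answers as the aggregation step. Several things here are assumptions made for illustration: `sample` is a hypothetical callable that returns one sampled completion per call (a real one would query an LLM with a non-zero temperature), completions are assumed to end with an `Answer: <value>` marker, and `fake_sample` only replays canned text so the code runs on its own.

```python
import re
from collections import Counter
from itertools import cycle
from typing import Callable, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    return matches[-1].strip() if matches else None


def self_consistent_answer(
    sample: Callable[[str], str], question: str, n: int = 5
) -> Optional[str]:
    """Sample n reasoning paths and return the most frequent final answer."""
    prompt = f"{question}\nThink step by step, then finish with 'Answer: <value>'."
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    # Ignore completions without a parsable answer, then take the majority.
    votes = Counter(answer for answer in answers if answer is not None)
    return votes.most_common(1)[0][0] if votes else None


# Canned completions standing in for diverse sampled reasoning paths.
_canned = cycle(
    [
        "The gap is 10 years, so he was 10 when I was 20. Answer: 10",
        "Set 5/7 * A = 0.5 * A, so A = 0. Answer: 0",
        "35 - 25 = 10, and 10 is half of 20. Answer: 10",
    ]
)


def fake_sample(prompt: str) -> str:
    return next(_canned)


print(self_consistent_answer(fake_sample, "At what age was my brother half of my age?"))
```

Even though one of the sampled paths reaches a wrong answer, the vote settles on the answer that most paths agree on.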

Existing Tools

Several applications and tools can help us craft better prompts. Here are three examples:

Agenta: An open-source tool that enables developers to work through various versions of prompts, parameters, and strategies to arrive at their desired outcome and model.

PromptPerfect: It provides a range of features, from multi-goal optimization to prompt-as-a-service functionality, and supports prompts in multiple languages.

PromptAppGPT: A low-code, prompt-based development framework for language model applications.

Conclusion

In conclusion, prompt engineering emerges as a cost-effective strategy for enhancing LLMs, offering a more economical alternative to retraining entire models. While the affordability of this approach is evident, prompt engineering is not a straightforward process: it takes a series of trial-and-error iterations to fine-tune and optimize prompts for the best performance.

Additionally, although some specialized tools can aid the prompt engineering process, their services may incur additional expenses. Nevertheless, these tools remain a more economical choice than retraining the entire model.