Because of their size and the compute needed for inference, Large Language Models are almost impossible to run as-is on a personal computer. I have read many discussions, efforts, and tutorials on how to run a large language model on modest hardware, such as a MacBook Pro M2 or the kind of personal computer we normally use for gaming.

Some people mentioned that they run language models using Ollama. Llama 2 was released with stunning results and capabilities, and it came in several model sizes: 7B, 13B, and 70B parameters. But I hadn't had the opportunity to try it out on my own computer. I usually rely on the Hugging Face Hub, but that wasn't an option for me because of the size of the model weights. So I went back to scrolling the LocalLLaMA subreddit and found a couple of users who use Ollama to run the model on low-spec machines. Today I finally have time to try it out.

Let’s get into it.

Note that I ran Ollama with the 4-bit quantized Llama 2 7B model on Windows 11 with WSL 2 (Ubuntu 20.04), an Intel i7, 32 GB of RAM, and a GTX 1660 Super.
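To get a feel for why the 4-bit 7B model fits on this kind of machine, here is a rough back-of-the-envelope sketch. It only counts the weights (parameters × bits per weight), so treat the numbers as a lower bound: real quantized files carry some extra metadata, and inference needs additional memory on top. The helper name `weight_footprint_gb` is my own and not part of Ollama.

```python
def weight_footprint_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Compare 16-bit weights with 4-bit quantized weights for the Llama 2 sizes.
for name, n_params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    fp16 = weight_footprint_gb(n_params, 16)
    q4 = weight_footprint_gb(n_params, 4)
    print(f"{name}: 16-bit ~{fp16:.1f} GB, 4-bit ~{q4:.1f} GB")
```

Under these assumptions, the 7B model drops from roughly 14 GB at 16 bits to roughly 3.5 GB at 4 bits, which is much more approachable for a consumer GPU or plain RAM.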

Installation

Visit the Ollama download page.

Here, I download Ollama for Linux:

curl -fsSL https://ollama.com/install.sh | sh

Up and Running

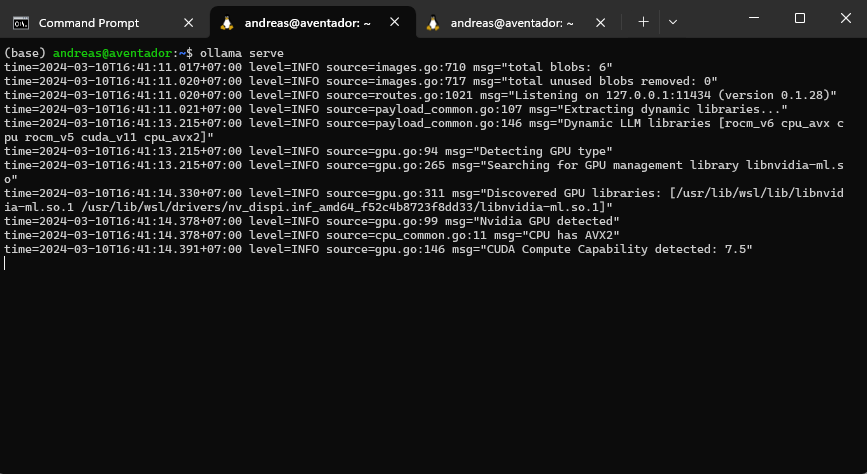

You need to open two terminal windows.

In the first window, start the server:

ollama serve

In the second window, run the program using llama2:

ollama run llama2

Then you can write a prompt as you usually do in ChatGPT or any other chatbot platform.

Endpoint

While Ollama is running, you can also access the model through its API endpoint.

curl http://localhost:11434/api/generate -d '{
  "model": "llama2",
  "prompt": "Why is the sky blue?"
}'

And it will return a bunch of responses:

{"model":"llama2","created_at":"2024-03-10T14:45:25.213304356Z","response":"\n","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.265805241Z","response":"B","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.315169474Z","response":"ird","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.362810377Z","response":"s","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.411185593Z","response":" fly","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.460384223Z","response":" by","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.50764932Z","response":" using","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.555239322Z","response":" their","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.603178331Z","response":" wings","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.655208408Z","response":" to","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.707275287Z","response":" generate","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.757585435Z","response":" lift","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.805507943Z","response":",","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.85393056Z","response":" which","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.902690282Z","response":" is","done":false}

{"model":"llama2","created_at":"2024-03-10T14:45:25.951120599Z","response":" the","done":false}

...

Apparently, you can flush this output to the web browser piece by piece, the way it commonly appears on chat platforms.
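Here is a minimal sketch of how those pieces could be stitched back together in Python, using only the standard library. It assumes the server is listening on the same localhost:11434 address as the curl call above and that each line of the reply is one JSON object with "response" and "done" fields, as in the output shown. The helper names `iter_fragments` and `stream_generate` are my own, not part of Ollama.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the "response" text from each newline-delimited JSON object."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines between objects
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the object marked done is the last one we read


def stream_generate(prompt: str, model: str = "llama2",
                    url: str = "http://localhost:11434/api/generate") -> Iterator[str]:
    """POST a prompt to the endpoint and yield text fragments as they arrive."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # The response object can be iterated line by line.
        yield from iter_fragments(resp)


# Example usage (needs `ollama serve` running in another terminal):
# for fragment in stream_generate("Why is the sky blue?"):
#     print(fragment, end="", flush=True)
```

Printing each fragment with `flush=True` gives the same typing effect in a terminal; a web backend could forward the fragments to the browser in the same loop.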